⚡ AlmaLinux High Availability: Complete Pacemaker Cluster Setup Guide

Hey there, uptime champion! 🎉 Ready to build a high availability cluster that keeps your services running when a server fails? Today we’re creating a Pacemaker cluster on AlmaLinux with automatic failover! 🚀

Whether you’re protecting critical databases, web services, or enterprise applications, this guide will turn your AlmaLinux servers into a resilient, self-healing cluster! 💪

🤔 Why is High Availability Important?

Imagine your critical service going down during peak business hours: lost revenue, angry customers, and sleepless nights! 😱 High availability keeps your services running, with only a brief pause while they fail over, even when a server fails!

Here’s why Pacemaker clusters on AlmaLinux are essential:

- 🛡️ Minimal Downtime - Services move to a healthy node when one fails

- ⚡ Automatic Failover - Switch to backup servers in seconds

- 🔄 Resource Distribution - Run different services on different nodes

- 💾 Data Protection - Synchronous replication keeps an up-to-date copy of your data on the other nodes

- 📊 Health Monitoring - Continuous service health checks

- 🎯 Resource Management - Ordering and colocation rules start services in the right sequence and place

- 💰 Cost Effective - Use commodity hardware for enterprise reliability

- 🚀 Scalability - Add nodes without service interruption

🎯 What You Need

Before we build your HA fortress, let’s gather our resources:

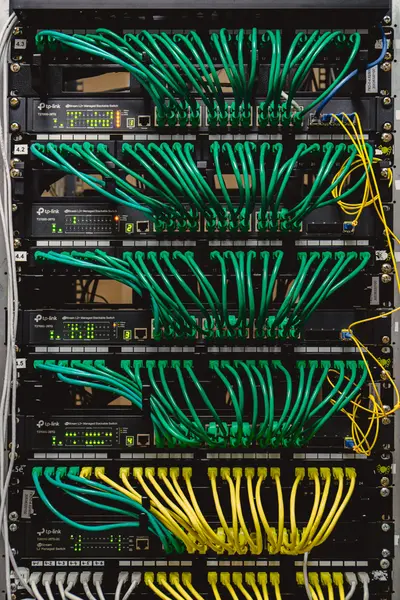

- ✅ 3 AlmaLinux 9.x servers (identical hardware recommended)
- ✅ Shared or replicated storage (SAN, NFS, or DRBD; this guide uses DRBD on an unused /dev/sdb1 partition on each node)
- ✅ Network connectivity between all nodes
- ✅ Static IP addresses for all cluster nodes
- ✅ Fencing device (IPMI, iLO, or network switch)
- ✅ Virtual IP for service access
- ✅ Time synchronization (NTP crucial!)
- ✅ Dedicated cluster network (recommended) 🌐

📝 Step 1: Prepare Cluster Nodes

Let’s prepare our AlmaLinux servers for clustering! 🎯

```bash
# On ALL cluster nodes, run these commands:

# Set the hostname (run only the line for the node you are on)
sudo hostnamectl set-hostname node1.cluster.local   # on node1
sudo hostnamectl set-hostname node2.cluster.local   # on node2
sudo hostnamectl set-hostname node3.cluster.local   # on node3

# Configure /etc/hosts on ALL nodes
sudo tee -a /etc/hosts << 'EOF'
192.168.1.10 node1.cluster.local node1
192.168.1.11 node2.cluster.local node2
192.168.1.12 node3.cluster.local node3
192.168.1.100 cluster-vip.cluster.local cluster-vip
EOF

# SELinux: permissive mode keeps this lab simple; the AlmaLinux HA packages
# also work with SELinux enforcing, which is preferable in production
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

# Configure the firewall. The high-availability service already covers
# pcsd (2224/tcp), pacemaker_remote (3121/tcp), DLM (21064/tcp) and corosync UDP
sudo firewall-cmd --permanent --add-service=high-availability
sudo firewall-cmd --permanent --add-port=7789/tcp   # DRBD replication (Step 3)
sudo firewall-cmd --permanent --add-service=http    # Apache (Step 4)
sudo firewall-cmd --reload

# Keep clocks in sync
sudo systemctl enable --now chronyd

# Enable the HighAvailability repository, then install cluster packages
# (httpd is the service the cluster will manage in Step 4)
sudo dnf config-manager --set-enabled highavailability
sudo dnf install -y pacemaker corosync pcs fence-agents-all httpd

# Enable and start pcsd
sudo systemctl enable --now pcsd

# Set the hacluster user password (same on all nodes; pick your own strong password)
echo "ClusterPassword123" | sudo passwd --stdin hacluster
```

Perfect! All nodes are prepared! 🎉
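Running `tee -a` a second time appends the same host entries again. Here is a minimal sketch of an idempotent alternative, assuming you keep the entries in a bash array; the function name `add_missing_hosts` and the `CLUSTER_HOSTS` array are hypothetical, and the target file is an argument so you can try it on a scratch copy before touching `/etc/hosts`:

```bash
#!/usr/bin/env bash
# add_missing_hosts FILE ENTRY...: append each ENTRY to FILE unless an identical
# line already exists (exact text match, so spacing must be identical too)
add_missing_hosts() {
  local file="$1"; shift
  local entry
  for entry in "$@"; do
    # -x: whole-line match, -F: fixed string (dots in IPs are not regex wildcards)
    if ! grep -qxF -- "$entry" "$file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$file"
    fi
  done
}

CLUSTER_HOSTS=(
  "192.168.1.10 node1.cluster.local node1"
  "192.168.1.11 node2.cluster.local node2"
  "192.168.1.12 node3.cluster.local node3"
  "192.168.1.100 cluster-vip.cluster.local cluster-vip"
)
```

From a root shell, `add_missing_hosts /etc/hosts "${CLUSTER_HOSTS[@]}"` can then be re-run safely on every node.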

🔧 Step 2: Create and Configure Cluster

Now let’s create our Pacemaker cluster:

```bash
# Run these commands from node1 ONLY:

# Authenticate all cluster nodes (pcs prompts for the password if -p is omitted)
sudo pcs host auth node1.cluster.local node2.cluster.local node3.cluster.local \
  -u hacluster -p ClusterPassword123

# Create the cluster
sudo pcs cluster setup mycluster \
  node1.cluster.local node2.cluster.local node3.cluster.local

# Start cluster on all nodes
sudo pcs cluster start --all

# Enable cluster to start on boot
sudo pcs cluster enable --all

# Check cluster status
sudo pcs status

# Configure cluster properties
sudo pcs property set stonith-enabled=false   # lab only; fencing is enabled in Example 2

# Keep the default no-quorum-policy (stop): in a 3-node cluster, a node that
# loses quorum must stop its resources instead of running them on its own.

# Prefer staying on the current node after a failover instead of moving back
sudo pcs resource defaults update resource-stickiness=100

# Save a copy of the current cluster configuration (CIB) as a backup
sudo pcs cluster cib /tmp/cluster_config

# Verify cluster is running
sudo pcs cluster status
sudo crm_mon -1
```

Excellent! Our cluster is running! 🌟
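The `no-quorum-policy` setting makes more sense once quorum is put into numbers. Corosync's votequorum treats a partition as quorate when it holds more than half of the expected votes, i.e. floor(n/2) + 1 with one vote per node. A small arithmetic sketch (the helper names `quorum_needed` and `tolerated_failures` are hypothetical):

```bash
#!/usr/bin/env bash
# quorum_needed N: votes a partition must hold to be quorate (one vote per node)
quorum_needed() {
  echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N: nodes that can fail while the rest still have quorum
tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 2 3 4 5; do
  printf '%d nodes: quorum=%d, survives %d failure(s)\n' \
    "$n" "$(quorum_needed "$n")" "$(tolerated_failures "$n")"
done
```

A three-node cluster needs 2 votes, so it keeps running after one node failure, while a single isolated node has no quorum. With the default `no-quorum-policy=stop` that lone node stops its resources, which is what keeps two halves of a split cluster from running the same service; `ignore` removes that protection. Four nodes tolerate no more failures than three, which is why odd node counts are common.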

🌟 Step 3: Configure Shared Storage with DRBD

Let’s set up DRBD for shared storage replication:

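```bash
# Install DRBD 9 on ALL nodes (the packages come from the ELRepo repository)
sudo dnf install -y elrepo-release
sudo dnf install -y drbd90-utils kmod-drbd90

# Create the mount point for the cluster filesystem (on ALL nodes)
sudo mkdir -p /srv/cluster

# Create DRBD configuration (identical on ALL nodes; /dev/sdb1 must be unused)
sudo tee /etc/drbd.d/cluster-data.res << 'EOF'
resource cluster-data {
  # DRBD 9 with three hosts: every host needs a node-id, plus a connection mesh.
  # Host names must match `uname -n` on each node.
  on node1.cluster.local {
    node-id   0;
    device    /dev/drbd0;
    disk      /dev/sdb1;
    address   192.168.1.10:7789;
    meta-disk internal;
  }
  on node2.cluster.local {
    node-id   1;
    device    /dev/drbd0;
    disk      /dev/sdb1;
    address   192.168.1.11:7789;
    meta-disk internal;
  }
  on node3.cluster.local {
    node-id   2;
    device    /dev/drbd0;
    disk      /dev/sdb1;
    address   192.168.1.12:7789;
    meta-disk internal;
  }
  connection-mesh {
    hosts node1.cluster.local node2.cluster.local node3.cluster.local;
  }
  handlers {
    # DRBD 9 versions of the Pacemaker fencing helper scripts
    fence-peer "/usr/lib/drbd/crm-fence-peer.9.sh";
    after-resync-target "/usr/lib/drbd/crm-unfence-peer.9.sh";
  }
  net {
    protocol C;
    cram-hmac-alg sha1;
    shared-secret "MySecretKey123";
    fencing resource-only;
  }
  disk {
    on-io-error detach;
    # Resync rate cap (replaces the old DRBD 8.3 "syncer { rate ...; }" section)
    c-max-rate 100M;
  }
}
EOF

# Initialize DRBD metadata and bring the resource up (on ALL nodes).
# Do not enable drbd.service: Pacemaker will start and stop DRBD.
sudo drbdadm create-md cluster-data
sudo drbdadm up cluster-data

# Make node1 primary and start the initial sync (ONLY on node1)
sudo drbdadm primary --force cluster-data

# Create filesystem (ONLY on node1)
sudo mkfs.ext4 /dev/drbd0
```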
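The forced promotion on node1 starts a full initial sync that runs in the background, and it is safest to let it finish before Pacemaker takes over. Below is a minimal sketch of a readiness check, assuming DRBD 9's `drbdadm status` prints fields such as `disk:UpToDate` and `peer-disk:Inconsistent done:45.20`, and (also an assumption) shows a `connection:` field only for peers that are not connected. The function name `drbd_all_uptodate` is hypothetical:

```bash
#!/usr/bin/env bash
# drbd_all_uptodate: read `drbdadm status` output on stdin; succeed only if
# every local (disk:) and peer (peer-disk:) state is UpToDate and no peer
# reports a connection: field (assumed to appear only for unconnected peers)
drbd_all_uptodate() {
  local status states
  status=$(cat)
  if grep -q 'connection:' <<< "$status"; then
    return 1
  fi
  states=$(grep -oE '(peer-)?disk:[A-Za-z]+' <<< "$status" | cut -d: -f2)
  [ -n "$states" ] || return 1          # nothing parsed: treat as not ready
  ! grep -vqx 'UpToDate' <<< "$states"  # fail if any state is not UpToDate
}
```

A simple wait loop on node1 could then be `until sudo drbdadm status cluster-data | drbd_all_uptodate; do sleep 10; done`.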

```bash
# Check DRBD status
sudo drbdadm status cluster-data
```

✅ Step 4: Configure Resources and Services

Let’s configure cluster resources for high availability:

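```bash
# On ALL nodes: let Pacemaker (not systemd) run Apache, and enable the
# status page that the apache resource agent polls
sudo systemctl disable --now httpd
sudo tee /etc/httpd/conf.d/status.conf << 'EOF'
<Location /server-status>
    SetHandler server-status
    Require local
</Location>
EOF

# On node1 ONLY: stage all changes in a CIB file, then push them in one step
sudo pcs cluster cib /tmp/resources_cfg

# Create DRBD resource as a promotable clone (one Promoted copy, the others Unpromoted)
sudo pcs -f /tmp/resources_cfg resource create cluster-drbd ocf:linbit:drbd \
  drbd_resource=cluster-data \
  op monitor interval=29s role=Promoted \
  op monitor interval=31s role=Unpromoted
sudo pcs -f /tmp/resources_cfg resource promotable cluster-drbd \
  promoted-max=1 promoted-node-max=1 clone-max=3 clone-node-max=1 notify=true

# Create filesystem resource
sudo pcs -f /tmp/resources_cfg resource create cluster-fs Filesystem \
  device=/dev/drbd0 directory=/srv/cluster fstype=ext4 \
  op monitor interval=20s

# Create Virtual IP resource
sudo pcs -f /tmp/resources_cfg resource create cluster-vip IPaddr2 \
  ip=192.168.1.100 cidr_netmask=24 \
  op monitor interval=30s

# Create Apache web server resource
sudo pcs -f /tmp/resources_cfg resource create cluster-web apache \
  configfile=/etc/httpd/conf/httpd.conf \
  statusurl="http://localhost/server-status" \
  op monitor interval=1min

# Create resource group: members start in order (fs, vip, web), stop in
# reverse order, and always run on the same node
sudo pcs -f /tmp/resources_cfg resource group add cluster-services \
  cluster-fs cluster-vip cluster-web

# A cloned resource cannot join a group, so tie the group to the Promoted DRBD copy
sudo pcs -f /tmp/resources_cfg constraint colocation add \
  cluster-services with Promoted cluster-drbd-clone INFINITY
sudo pcs -f /tmp/resources_cfg constraint order \
  promote cluster-drbd-clone then start cluster-services

# Apply everything at once
sudo pcs cluster cib-push /tmp/resources_cfg --config
```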
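To confirm that a failover actually happens, you need to know which node runs the web service before and after. `crm_resource --resource cluster-web --locate` reports this; the sketch below extracts the node name, assuming output lines of the form `resource cluster-web is running on: node1.cluster.local` (check the wording on your Pacemaker version). The function name `active_node` is hypothetical:

```bash
#!/usr/bin/env bash
# active_node: read `crm_resource --locate` output on stdin and print the node
# named after "is running on:" (first match only; empty if not running)
active_node() {
  sed -n 's/.*is running on: *\([^ ]*\).*/\1/p' | head -n 1
}

# Example failover test, commented out so the sketch runs anywhere.
# Run it on a cluster node:
#   before=$(sudo crm_resource --resource cluster-web --locate | active_node)
#   sudo pcs node standby "$before"      # push all resources off that node
#   sleep 30
#   after=$(sudo crm_resource --resource cluster-web --locate | active_node)
#   echo "cluster-web moved from $before to $after"
#   sudo pcs node unstandby "$before"    # allow the node to host resources again
```

Putting a node in standby exercises the same ordering and colocation rules as a real failure, without pulling any cables.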

```bash
# Check resource status
sudo pcs status resources
```

🎮 Quick Examples

Example 1: Database High Availability

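```bash
# Create MySQL/MariaDB HA resource. Data, PID file and socket live on the DRBD
# filesystem; /etc/my.cnf must use the same datadir and socket, and the data
# directory must be initialized on the node that currently mounts /srv/cluster.
sudo pcs resource create cluster-mysql mysql \
  binary="/usr/bin/mysqld_safe" \
  config="/etc/my.cnf" \
  datadir="/srv/cluster/mysql" \
  pid="/srv/cluster/mysql/mysqld.pid" \
  socket="/srv/cluster/mysql/mysql.sock" \
  op start timeout=60s \
  op stop timeout=60s \
  op monitor interval=20s timeout=30s \
  --disabled

# Add it to the existing group (a resource can belong to only one group) so it
# starts after the filesystem and runs on the same node, then enable it
sudo pcs resource group add cluster-services cluster-mysql --after cluster-fs
sudo pcs resource enable cluster-mysql

# Create database backup script
sudo tee /usr/local/bin/cluster-db-backup.sh << 'EOF'
#!/bin/bash
# Cluster Database Backup
set -euo pipefail

BACKUP_DIR="/srv/cluster/backups"
SOCKET="/srv/cluster/mysql/mysql.sock"
DATE=$(date +%Y%m%d_%H%M%S)

# Only the node that currently mounts the DRBD filesystem runs the database
mountpoint -q /srv/cluster || exit 0

mkdir -p "$BACKUP_DIR"

# Back up and compress in one step
mysqldump --socket="$SOCKET" --all-databases --single-transaction \
  | gzip > "$BACKUP_DIR/full_backup_$DATE.sql.gz"

# Clean old backups (keep 7 days)
find "$BACKUP_DIR" -name "*.sql.gz" -mtime +7 -delete

echo "Database backup completed: $BACKUP_DIR/full_backup_$DATE.sql.gz"
EOF
```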
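The cleanup line in the backup script depends on how `find -mtime` rounds: a file's age is counted in whole 24-hour periods with the remainder discarded, and `+7` means "more than 7", so a backup is deleted only once it is at least 8 full days old. Here is a self-contained demonstration in a scratch directory; the helpers `prune_backups` and `make_backup` are hypothetical, and `touch -d "@<epoch>"` assumes GNU coreutils:

```bash
#!/usr/bin/env bash
# prune_backups DIR DAYS: the same find expression cluster-db-backup.sh uses
prune_backups() {
  find "$1" -name "*.sql.gz" -mtime "+$2" -delete
}

demo=$(mktemp -d)
now=$(date +%s)
# make_backup NAME AGE_DAYS: empty file whose mtime is AGE_DAYS days plus one minute old
make_backup() {
  touch -d "@$(( now - $2 * 86400 - 60 ))" "$demo/$1"
}

make_backup full_backup_a.sql.gz 3
make_backup full_backup_b.sql.gz 7
make_backup full_backup_c.sql.gz 8
make_backup full_backup_d.sql.gz 9

prune_backups "$demo" 7
ls "$demo"   # full_backup_a.sql.gz and full_backup_b.sql.gz remain
# Remove the scratch directory afterwards: rm -rf "$demo"
```

So "keep 7 days" retains backups up to just under 8 days old.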

```bash
sudo chmod +x /usr/local/bin/cluster-db-backup.sh
```

Example 2: Advanced Fencing Configuration

```bash
# Configure IPMI fencing (replace with your IPMI details)
sudo pcs stonith create fence-node1 fence_ipmilan \
  pcmk_host_list="node1.cluster.local" \
  ip="192.168.2.10" username="admin" password="ipmipass" lanplus=1

sudo pcs stonith create fence-node2 fence_ipmilan \
  pcmk_host_list="node2.cluster.local" \
  ip="192.168.2.11" username="admin" password="ipmipass" lanplus=1

sudo pcs stonith create fence-node3 fence_ipmilan \
  pcmk_host_list="node3.cluster.local" \
  ip="192.168.2.12" username="admin" password="ipmipass" lanplus=1

# Keep each fence device off the node it is meant to power-cycle
sudo pcs constraint location fence-node1 avoids node1.cluster.local
sudo pcs constraint location fence-node2 avoids node2.cluster.local
sudo pcs constraint location fence-node3 avoids node3.cluster.local

# Enable stonith
sudo pcs property set stonith-enabled=true
```
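The three fencing blocks differ only in node name and IPMI address, so with more nodes it is easy to mistype one. Below is a minimal sketch of a generator that prints the same `pcs` commands for review before anything runs; the `FENCE_MAP` array, the `IPMI_USER`/`IPMI_PASS` variables and the function name `fence_commands` are hypothetical, and it assumes the password contains no double quotes:

```bash
#!/usr/bin/env bash
# Node-to-IPMI-address map (adjust to your environment)
FENCE_MAP=(
  "node1.cluster.local 192.168.2.10"
  "node2.cluster.local 192.168.2.11"
  "node3.cluster.local 192.168.2.12"
)

# fence_commands: print one stonith create and one avoids constraint per node.
# Credentials come from IPMI_USER (default admin) and IPMI_PASS (required).
fence_commands() {
  local entry node ip short
  if [ -z "${IPMI_PASS:-}" ]; then
    echo "IPMI_PASS is not set" >&2
    return 1
  fi
  for entry in "${FENCE_MAP[@]}"; do
    read -r node ip <<< "$entry"
    short=${node%%.*}   # node1.cluster.local -> node1
    printf 'pcs stonith create fence-%s fence_ipmilan pcmk_host_list="%s" ip="%s" username="%s" password="%s" lanplus=1\n' \
      "$short" "$node" "$ip" "${IPMI_USER:-admin}" "$IPMI_PASS"
    printf 'pcs constraint location fence-%s avoids %s\n' "$short" "$node"
  done
}
```

Run `IPMI_PASS='...' fence_commands` to review the output, then pipe the same command into `sudo bash` to apply it.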

```bash
# Test fencing (BE CAREFUL! This really power-cycles node2)
# sudo pcs stonith fence node2.cluster.local
```

Example 3: Cluster Monitoring and Alerting

```bash
# Install a command-line mailer (s-nail provides the mail command on AlmaLinux 9;
# outgoing mail still needs a configured MTA or SMTP relay)
sudo dnf install -y s-nail

# Create cluster monitoring script (it runs as root from cron, so no sudo inside)
sudo tee /usr/local/bin/cluster-monitor.sh << 'EOF'
#!/bin/bash
# Comprehensive Cluster Monitoring
LOG_FILE="/var/log/cluster-monitor.log"
ALERT_EMAIL="admin@example.com"   # placeholder: replace with your address

echo "$(date): Starting cluster monitoring check" >> "$LOG_FILE"

# Check cluster status
CLUSTER_STATUS=$(pcs status 2>/dev/null | grep -c "OFFLINE\|FAILED\|ERROR")
if [ "$CLUSTER_STATUS" -gt 0 ]; then
  echo "⚠️ CLUSTER ALERT: Issues detected!"
  pcs status | mail -s "Cluster Alert - $(hostname)" "$ALERT_EMAIL"
  echo "$(date): ALERT - Cluster issues detected" >> "$LOG_FILE"
else
  echo "✅ Cluster status: Healthy"
  echo "$(date): Cluster status healthy" >> "$LOG_FILE"
fi

# Check resource status
FAILED_RESOURCES=$(pcs status resources 2>/dev/null | grep -c "FAILED\|Stopped")
if [ "$FAILED_RESOURCES" -gt 0 ]; then
  echo "⚠️ RESOURCE ALERT: Failed resources detected!"
  pcs status resources | mail -s "Resource Alert - $(hostname)" "$ALERT_EMAIL"
  echo "$(date): ALERT - Failed resources detected" >> "$LOG_FILE"
fi

# Check DRBD status (DRBD 9 reports StandAlone or Connecting for broken links)
DRBD_STATUS=$(drbdadm status cluster-data 2>/dev/null | grep -c "StandAlone\|Connecting")
if [ "$DRBD_STATUS" -gt 0 ]; then
  echo "⚠️ DRBD ALERT: DRBD synchronization issues!"
  drbdadm status cluster-data | mail -s "DRBD Alert - $(hostname)" "$ALERT_EMAIL"
  echo "$(date): ALERT - DRBD issues detected" >> "$LOG_FILE"
fi

# Resource usage monitoring
echo "📊 Resource Usage:"
echo "Load average (1/5/15 min): $(cut -d' ' -f1-3 /proc/loadavg)"
echo "Memory: $(free | awk '/^Mem:/ {printf "%.1f%%", $3/$2 * 100.0}')"
# /srv/cluster is mounted only on the node running the service group
if mountpoint -q /srv/cluster; then
  echo "Disk: $(df -h /srv/cluster | tail -1 | awk '{print $5}')"
fi

echo "$(date): Monitoring check completed" >> "$LOG_FILE"
EOF

sudo chmod +x /usr/local/bin/cluster-monitor.sh
```
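Scheduled every 5 minutes on all three nodes, the monitoring script sends the same alert again and again for as long as a problem lasts. One way to mail only when something changes is to keep a checksum of the last reported problems in a state file. A minimal sketch; the function name `status_changed` and the state-file path are hypothetical. Feed it only the problem lines, because `pcs status` also prints timestamps that change on every run:

```bash
#!/usr/bin/env bash
# status_changed STATE_FILE: read the current problem lines on stdin.
# Succeeds, and stores the new checksum, only if they differ from the last run.
status_changed() {
  local state_file="$1" new old=""
  new=$(sha256sum | cut -d' ' -f1)
  if [ -f "$state_file" ]; then
    old=$(cat "$state_file")
  fi
  if [ "$new" != "$old" ]; then
    printf '%s\n' "$new" > "$state_file"
    return 0
  fi
  return 1
}

# Possible use inside cluster-monitor.sh:
#   PROBLEMS=$(pcs status 2>/dev/null | grep "OFFLINE\|FAILED\|ERROR")
#   if printf '%s' "$PROBLEMS" | status_changed /var/tmp/cluster-monitor.state; then
#     pcs status | mail -s "Cluster Alert - $(hostname)" "$ALERT_EMAIL"
#   fi
```

When the problem clears, the input becomes empty, and that also counts as a change, so the same check can double as a recovery notice.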

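```bash
# Schedule monitoring every 5 minutes as root via a cron.d file
# (stdout is discarded; alerts go out through mail)
echo "*/5 * * * * root /usr/local/bin/cluster-monitor.sh > /dev/null" | sudo tee /etc/cron.d/cluster-monitor
```

🚨 Fix Common Problems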

Problem 1: Cluster Split-Brain

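```bash
# Check cluster quorum
sudo pcs quorum status

# Regain quorum on a lone surviving node (DANGEROUS: only when you are sure the
# other nodes are really down, ideally fenced). Changes the live cluster only.
sudo pcs quorum expected-votes 1

# Fix DRBD split-brain by choosing which node's data survives.
# On the node whose changes you will DISCARD:
sudo drbdadm disconnect cluster-data
sudo drbdadm secondary cluster-data
sudo drbdadm connect --discard-my-data cluster-data
# On the surviving node, if it shows StandAlone:
sudo drbdadm connect cluster-data

# Verify DRBD sync
sudo drbdadm status cluster-data
```

Problem 2: Resource Failures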

```bash
# Check failed resources
sudo pcs status resources

# Clean up failed resources (clears the failure history so Pacemaker re-checks the resource)
sudo pcs resource cleanup cluster-web

# Move the service group to a different node
# (cluster-web is a group member, so move the whole group)
sudo pcs resource move cluster-services node2.cluster.local

# Remove any leftover movement constraint after testing
sudo pcs resource clear cluster-services

# Check logs for errors
sudo journalctl -u pacemaker -n 50
```

Problem 3: Network Communication Issues

```bash
# Test cluster communication (link status as seen by corosync)
sudo corosync-cfgtool -s

# Check firewall settings
sudo firewall-cmd --list-all
```
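`firewall-cmd --list-all` only shows the local rules. To check from one node that another node's pcsd port (2224/tcp) is actually reachable, here is a minimal sketch using bash's built-in `/dev/tcp` redirection and coreutils `timeout`. The function names `tcp_open` and `check_cluster_ports` are hypothetical, and Corosync's UDP traffic cannot be tested this way:

```bash
#!/usr/bin/env bash
# tcp_open HOST PORT: succeed if a TCP connection to HOST:PORT opens within 2 seconds
tcp_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# check_cluster_ports [PORT...]: try each port (default 2224, pcsd) on every node
check_cluster_ports() {
  local node port
  for node in node1 node2 node3; do
    for port in "${@:-2224}"; do
      if tcp_open "$node" "$port"; then
        echo "$node:$port open"
      else
        echo "$node:$port UNREACHABLE"
      fi
    done
  done
}
```

Running `check_cluster_ports` on each node in turn narrows things down: `UNREACHABLE` for a node that is up usually points at its firewall or a stopped pcsd.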

```bash
# Restart cluster services (disruptive: all resources stop while the cluster is down)
sudo pcs cluster stop --all
sudo pcs cluster start --all

# Verify cluster membership
sudo pcs status corosync
```

Problem 4: DRBD Synchronization Problems

```bash
# Check DRBD status
sudo drbdadm status cluster-data

# Force a full resync (run on the secondary node whose copy should be overwritten)
sudo drbdadm invalidate cluster-data

# Check sync progress
watch sudo drbdadm status cluster-data
```
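How long a full resync takes is roughly the remaining data divided by throughput: remaining seconds ≈ size × (100 − done%) / 100 / rate. A small awk-based helper (the name `drbd_sync_eta` is hypothetical) takes the device size in GiB, the `done:` percentage shown by `drbdadm status`, and a rate in MiB/s. It assumes the link actually sustains the 100M resync rate limit configured in Step 3:

```bash
#!/usr/bin/env bash
# drbd_sync_eta SIZE_GIB DONE_PERCENT RATE_MIB_S: print estimated seconds left
drbd_sync_eta() {
  awk -v size="$1" -v pct="$2" -v rate="$3" \
    'BEGIN { printf "%d\n", size * 1024 * (100 - pct) / 100 / rate }'
}

# Example: a 500 GiB device, 45.20% synced, at 100 MiB/s
secs=$(drbd_sync_eta 500 45.20 100)
printf 'about %d min left\n' $(( secs / 60 ))   # prints: about 46 min left
```

A full 500 GiB resync at 100 MiB/s works out to 5120 seconds, a bit over 85 minutes. Real speed also depends on disk and network throughput, and DRBD's dynamic resync controller adjusts the rate as it goes.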

```bash
# Verify DRBD configuration (prints the configuration as drbdadm parsed it)
sudo drbdadm dump cluster-data
```

📋 Simple Commands Summary

| Command | Purpose |
|---|---|
| `sudo pcs status` | Show cluster status |
| `sudo pcs resource status` | List all resources and their state |
| `sudo crm_mon -1` | One-time cluster monitor |
| `sudo pcs cluster start --all` | Start cluster on all nodes |
| `sudo pcs resource cleanup <resource>` | Clean up a failed resource |
| `sudo drbdadm status` | Show DRBD status |
| `sudo pcs stonith status` | List fencing devices |
| `sudo pcs constraint` | Show resource constraints |

💡 Tips for Success

🎯 Test Regularly: Practice failover scenarios in development

🔍 Monitor Continuously: Set up comprehensive monitoring and alerting

📊 Document Everything: Keep detailed records of cluster configuration

🛡️ Implement Fencing: Proper fencing prevents data corruption

🚀 Performance Tune: Optimize based on your workload patterns

📝 Backup Configs: Regularly backup cluster and resource configurations

🔄 Practice Recovery: Test disaster recovery procedures regularly

⚡ Plan Capacity: Monitor resource usage and plan for growth

🏆 What You Learned

Congratulations! You’ve built a working high availability cluster on AlmaLinux! 🎉

- ✅ Built a three-node Pacemaker cluster
- ✅ Configured DRBD for data replication
- ✅ Set up resource management and failover
- ✅ Implemented monitoring and alerting
- ✅ Created fencing mechanisms for data protection
- ✅ Tuned failover behavior with resource stickiness
- ✅ Learned troubleshooting techniques for HA clusters
- ✅ Built an enterprise-style availability stack

🎯 Why This Matters

High availability clusters are the backbone of modern enterprise infrastructure! 🌟 With your AlmaLinux Pacemaker cluster, you now have:

- Enterprise-grade reliability with no single server as a point of failure

- Automatic failover that protects against hardware failures

- Scalable architecture that grows with your business needs

- Data protection through synchronized storage systems

- Professional expertise in high availability technologies

You’re now equipped to build and maintain mission-critical infrastructure that never sleeps! Your HA skills put you in the league of enterprise infrastructure architects! 🚀

Keep clustering, keep improving, and remember – in the world of high availability, every second of uptime counts! You’ve got this! ⭐🙌